Whether you're a candidate looking for a new opportunity in software development or data analysis, or a hiring manager preparing to interview candidates for an opening at your company, knowing common programming interview questions and answers is a must.

Preparing for these questions is often a difficult and time-consuming process for both employers and candidates.

In this article, we'll examine 24 essential programming questions and answers for beginner, intermediate, and advanced practitioners. These questions and answers will help you better prepare for the interview and know what to expect from your interviewer/interviewee.

Basic Programming Interview Questions

Let’s start with some of the easiest and most common programming interview questions.

1. What is a variable in programming?

Variables are fundamental elements in programming. A variable is essentially a container that stores data, and its value can be changed during the execution of a program. Modern programming languages support various types of variables, each designed for specific data types and use cases.

2. Explain data types with examples

In programming, data types are the types of values that variables can store. Every data type comes with associated properties, which determine the mathematical, relational, or logical operations that can be performed on its values.

For example, in Python, there are several numeric data types, including integers, which store whole numbers, and floats, which store values with decimal points. There are also strings, which store ordered sequences of characters enclosed in single or double quotes.

    integer_var = 25
    float_var = 10.2
    string_var = "Welcome to DataCamp"

3. Explain the difference between compiled and interpreted languages

The main difference between compiled and interpreted languages is how the source code is translated into machine code (i.e., binary code). Compiled languages are translated before execution, while interpreted languages are translated at runtime.

Because the translation work is done ahead of time, compiled programs generally run faster. That makes compiled languages more suitable for complex tasks requiring speed, such as real-time traffic tracking in autonomous cars. However, compiled languages, such as C and C++, tend to be more difficult to understand and work in than interpreted languages like Python.

4. What are conditionals and loops?

Conditional statements, commonly known as if-else statements, are used to run certain blocks of code based on specific conditions. These statements help control the flow of an algorithm, making it behave differently in different situations.

By contrast, a loop in programming is a sequence of code that is continually repeated until a certain condition is reached, helping reduce hours of work to seconds. The most common loops are for loops and while loops.
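As a small illustration, here is a minimal Python sketch that combines both ideas. The function names `classify` and `countdown` are made up for this example.

```python
def classify(numbers):
    """Label each number using a conditional inside a for loop."""
    labels = []
    for n in numbers:              # for loop: visits each element once
        if n < 0:                  # conditional: one branch runs per element
            labels.append("negative")
        elif n == 0:
            labels.append("zero")
        else:
            labels.append("positive")
    return labels


def countdown(start):
    """Collect values with a while loop that stops when start reaches 0."""
    values = []
    while start > 0:               # repeats until the condition is false
        values.append(start)
        start -= 1
    return values


print(classify([-2, 0, 7]))  # ['negative', 'zero', 'positive']
print(countdown(3))          # [3, 2, 1]
```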

5. What is the difference between an array and a linked list?

Arrays and Linked Lists are among the most important data structures. They are structures that store information using different strategies.

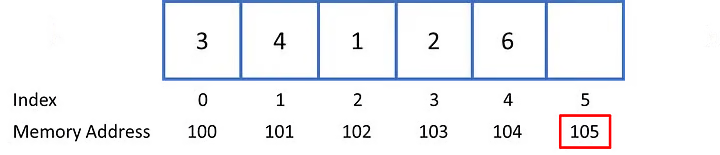

An array stores elements in contiguous memory locations, i.e., each element is stored in a memory location adjacent to the others. Further, the size of an array is unchangeable and is declared beforehand.

Array.

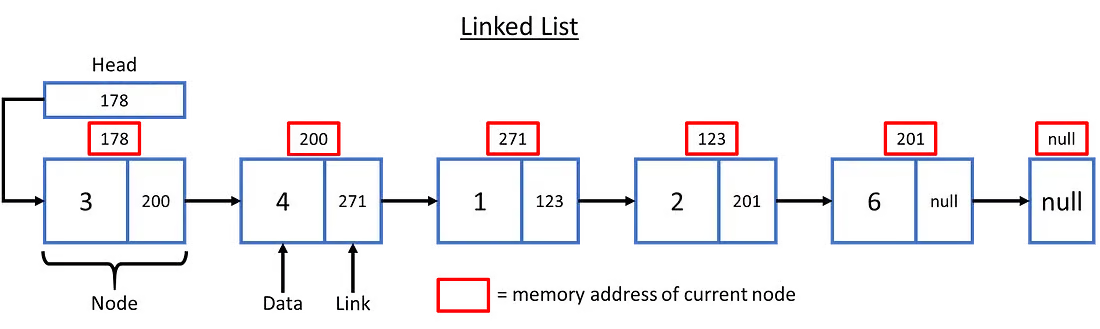

By contrast, linked lists use pointers to store the memory address of the next element, as shown below.

Linked list.

Overall, arrays are preferred when quick access to elements is needed, and memory is a concern, whereas linked lists are better in cases of frequent insertions and deletions.
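To make the trade-off concrete, here is a minimal sketch of a singly linked list in Python. The class and method names (`Node`, `LinkedList`, `push_front`, `get`, `to_list`) are illustrative choices, not a standard API. Inserting at the front only rewires one reference, whereas reading the element at a given position requires walking the chain.

```python
class Node:
    """One element of the list: a value plus a reference to the next node."""

    def __init__(self, value, next_node=None):
        self.value = value
        self.next = next_node


class LinkedList:
    def __init__(self):
        self.head = None

    def push_front(self, value):
        # O(1): the new node simply points at the old head.
        self.head = Node(value, self.head)

    def get(self, index):
        # O(n): follow the references one node at a time.
        current = self.head
        for _ in range(index):
            if current is None:
                raise IndexError("index out of range")
            current = current.next
        if current is None:
            raise IndexError("index out of range")
        return current.value

    def to_list(self):
        # Collect the values in order, for display purposes.
        values = []
        current = self.head
        while current is not None:
            values.append(current.value)
            current = current.next
        return values


linked = LinkedList()
for value in (3, 2, 1):
    linked.push_front(value)

print(linked.to_list())  # [1, 2, 3]
print(linked.get(2))     # 3
```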

6. Explain recursion with an example

In programming, recursion occurs when a function calls itself. A great example of recursion is a function designed to calculate the factorial of a number. Remember that the factorial of a non-negative integer n is the product of all positive integers less than or equal to n.

    def factorial(n):
        if n < 2:
            return 1
        else:
            return n * factorial(n - 1)

    factorial(5)  # returns 120

7. What are pointers, and how do they work?

A pointer is a variable that stores the memory address of another variable as its value. Commonly used in data structures like linked lists, pointers allow low-level memory access, dynamic memory allocation, and many other functionalities.
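Python does not expose raw pointers in everyday code, but the standard library's `ctypes` module provides C-compatible types that can illustrate the idea. The following is only a sketch of the concept, not how Python programs normally manage memory.

```python
from ctypes import c_int, pointer

x = c_int(10)          # a C-style integer stored in memory
p = pointer(x)         # p holds the address of x

print(p.contents.value)  # 10: reading through the pointer

p.contents.value = 20    # writing through the pointer changes x itself
print(x.value)           # 20
```

In languages such as C or C++, the same idea is expressed directly with the `&` (address-of) and `*` (dereference) operators.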

8. What is Big-O notation, and why is it important?

Big-O notation is a mathematical notation used to describe how the cost of an algorithm grows with the size of its input. It is most often used to express the worst-case complexity of an algorithm. It covers time complexity, i.e., how long an algorithm takes to run, and space complexity, i.e., the extra memory the algorithm requires. We explain the foundations of Big O notation in a separate tutorial.
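One way to get a feel for the difference between complexity classes is to count steps. The sketch below compares a linear search, O(N), with a binary search, O(log N), on a sorted list; the helper names are hypothetical and exist only to count loop iterations.

```python
def linear_search_steps(items, target):
    """Count how many elements a linear scan inspects: O(N) in the worst case."""
    steps = 0
    for item in items:
        steps += 1
        if item == target:
            break
    return steps


def binary_search_steps(items, target):
    """Count the halving steps of a binary search on sorted input: O(log N)."""
    steps = 0
    low, high = 0, len(items) - 1
    while low <= high:
        steps += 1
        mid = (low + high) // 2
        if items[mid] == target:
            break
        if items[mid] < target:
            low = mid + 1
        else:
            high = mid - 1
    return steps


data = list(range(1024))               # already sorted
print(linear_search_steps(data, 1023))  # 1024
print(binary_search_steps(data, 1023))  # 11
```

Doubling the size of `data` roughly doubles the first count but adds only one step to the second.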

Intermediate Programming Interview Questions

In this section, we focus on common questions for candidates preparing for mid-level roles with some experience.

Object-oriented programming interview questions

9. What are the four pillars of OOP?

Object-oriented programming is a paradigm that focuses on objects. OOP is based on the following four pillars:

- Abstraction. Abstraction in OOP allows us to handle complexity by hiding unnecessary details from the user. That enables the user to implement more complex logic on top of the provided abstraction without understanding or even thinking about all the hidden complexity.

- Encapsulation. Encapsulation is the process of bundling data and behavior into an object defined by a class, and controlling how that data can be accessed from outside the object.

- Inheritance. This property allows for the creation of new classes that retain the functionality of parent classes.

- Polymorphism. Polymorphism is a concept that allows you to use the same name for different methods that behave differently depending on the object they are called on or the input they receive.

10. Explain the difference between class and object

In object-oriented programming, data and methods are organized into objects that are defined by their class. A class is a blueprint that dictates which attributes and behavior its objects have, and an object is a concrete instance created from that class.
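A minimal sketch in Python shows the distinction. The `Dog` class is a made-up example: the class is written once, while each object carries its own data.

```python
class Dog:
    """The class: a blueprint describing what every Dog has and can do."""

    def __init__(self, name):
        self.name = name            # data stored on each individual object

    def speak(self):
        return f"{self.name} says woof"


# Objects: two separate instances created from the same class.
rex = Dog("Rex")
bella = Dog("Bella")

print(rex.speak())              # Rex says woof
print(bella.speak())            # Bella says woof
print(isinstance(rex, Dog))     # True
print(rex is bella)             # False: distinct objects
```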

11. What is polymorphism, and how is it implemented in Java/Python?

In OOP, polymorphism allows you to use the same name for different methods that behave differently depending on the object they are called on. This is commonly used in combination with inheritance.

For example, say we have a parent class called Shape that has a method to calculate the area of the shape. You may have two child classes, Circle and Square. While each will have a method called area, the definition of that method will be different for each shape. Compare the area methods for the different shapes in the code block below.

    # Define the parent class Shape
    class Shape:
        # Initialize the attributes for the shape
        def __init__(self, name):
            self.name = name

        # Define a generic method for calculating the area
        def area(self):
            print(f"The area of {self.name} is unknown.")


    # Define the child class Circle that inherits from Shape
    class Circle(Shape):
        # Initialize the attributes for the circle
        def __init__(self, name, radius):
            # Call the parent class constructor
            super().__init__(name)
            self.radius = radius

        # Override the area method for the circle
        def area(self):
            # Use the formula pi * r^2
            area = 3.14 * self.radius ** 2
            print(f"The area of {self.name} is {area}.")


    # Define the child class Square that inherits from Shape
    class Square(Shape):
        # Initialize the attributes for the square
        def __init__(self, name, side):
            # Call the parent class constructor
            super().__init__(name)
            self.side = side

        # Override the area method for the square
        def area(self):
            # Use the formula s^2
            area = self.side ** 2
            print(f"The area of {self.name} is {area}.")

12. Explain the difference between inheritance and composition

Both inheritance and composition are techniques used in OOP to enhance code reusability. The former is the mechanism by which a new class is derived from an existing class, inheriting all of its properties and methods. The latter involves building complex objects by combining simple parts rather than inheriting from a base class.

Overall, composition is often preferred over inheritance because it tends to offer greater flexibility, reduced complexity, and improved maintainability.
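The two techniques can be contrasted with a short sketch. All class names here (`Vehicle`, `Truck`, `Engine`, `ElectricMotor`, `Car`) are hypothetical and chosen only for illustration.

```python
class Vehicle:
    def describe(self):
        return "a vehicle"


class Truck(Vehicle):
    """Inheritance: a Truck *is a* Vehicle and gets describe() for free."""


class Engine:
    def start(self):
        return "engine started"


class ElectricMotor:
    def start(self):
        return "motor humming"


class Car:
    """Composition: a Car *has a* power unit and delegates work to it."""

    def __init__(self, power_unit):
        self.power_unit = power_unit

    def start(self):
        return self.power_unit.start()


print(Truck().describe())            # a vehicle
print(Car(Engine()).start())         # engine started
print(Car(ElectricMotor()).start())  # motor humming
```

With composition, swapping the part passed to `Car` changes its behavior without touching the class hierarchy, which is where much of the flexibility comes from.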

Functional programming interview questions

13. What is functional programming?

In computer science, functional programming is a programming paradigm where programs are constructed by applying and composing functions. It is a subtype of the declarative programming paradigm.

Functional programming stands out because of its traceability and predictability. This is because it favors immutable data and functions whose behavior doesn't change once they are defined. Functions are defined, often in a separate section of the code (or sometimes in a different file), and then used throughout the code as needed. This makes it easy to determine what is happening in a section of code, as a function performs the same way and is called in the same way everywhere.

14. What is the difference between imperative and declarative programming?

In terms of programming paradigms, there are two broad categories that many of the commonly used paradigms fall under: imperative and declarative programming.

At a high level, imperative programming is a category of paradigms in which the programmer spells out the exact instructions for the program to follow step-by-step. The focus is on how to execute the program. This style of programming paradigm could be thought of in terms of a flow chart, where the program follows a given path based on specified inputs. Most mainstream programming languages make use of imperative programming.

Declarative programming, by contrast, is a category of paradigms in which the programmer defines the logic of the program but does not give details about the exact steps the program should follow. The focus is on what the program should execute rather than precisely how. This type of programming is less common but can be used in situations where the rules are specified and the precise path to the solution is not known. An example might be to solve a number puzzle like Sudoku.
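Even within a single language, the two styles can be contrasted. The sketch below computes the sum of the squares of the even numbers in a list twice: first by spelling out each step, then with an expression that is closer to the declarative style. Python is not a purely declarative language, so the second version only leans in that direction.

```python
numbers = [1, 2, 3, 4, 5, 6]

# Imperative style: describe how to build the result, step by step.
total_imperative = 0
for n in numbers:
    if n % 2 == 0:
        total_imperative += n * n

# Declarative-leaning style: describe what the result is.
total_declarative = sum(n * n for n in numbers if n % 2 == 0)

print(total_imperative)   # 56
print(total_declarative)  # 56
```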

15. What are pure functions, and why are they important?

Pure functions are a core component of functional programming. Simply put, a pure function is a function that takes input values and returns output values based only on those input values. Pure functions have no "side effects," meaning that they have no influence on other variables in the program, don’t write to files, and don’t alter information stored in a database.

The nature of pure functions makes them the perfect companion for programmers. First, they are very easy to debug, because any pure function called with the same set of arguments will always return the same value.

For the very same reason, pure functions are easier to parallelize. Impure functions, by contrast, can interfere with one another and produce different results in different executions, because they read or update shared mutable variables in a different order.
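The difference is easy to see side by side. In this sketch, `scaled` and `scale_in_place` are hypothetical names: the first is pure, while the second has a side effect because it modifies the list it receives.

```python
def scaled(values, factor):
    """Pure: builds a new list and leaves its inputs untouched."""
    return [v * factor for v in values]


def scale_in_place(values, factor):
    """Impure: mutates the caller's list (a side effect) and returns nothing."""
    for i in range(len(values)):
        values[i] *= factor


data = [1, 2, 3]

print(scaled(data, 2))  # [2, 4, 6]
print(data)             # [1, 2, 3]: unchanged, so the call can be repeated safely

scale_in_place(data, 2)
print(data)             # [2, 4, 6]: calling it again would give a different list
```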

16. Explain higher-order functions with an example

In functional programming, a higher-order function is a function that either takes one or more functions as arguments (i.e., a procedural parameter, which is a parameter of a procedure that is itself a procedure), returns a function, or both. This contrasts with first-order functions, which neither take nor return other functions.

A common example of a higher-order function is Python’s map(). Common in many functional programming languages, map() takes as arguments a function f and a collection of elements and returns a new collection with the function applied to each element from the collection. For example, let’s say you have a list of numbers, and you want a new collection with the numbers converted into floats.

    numbers = [1, 2, 3, 4]
    res = map(float, numbers)
    print(list(res))  # [1.0, 2.0, 3.0, 4.0]
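map() is a higher-order function because it takes a function as an argument. The other direction, a function that returns a function, can be sketched as follows; `make_multiplier` is a made-up name used only for this example.

```python
def make_multiplier(factor):
    """Higher-order function: builds and returns a new function."""

    def multiply(value):
        # The inner function remembers `factor` from the enclosing call.
        return value * factor

    return multiply


double = make_multiplier(2)
triple = make_multiplier(3)

print(double(5))                     # 10
print(list(map(triple, [1, 2, 3])))  # [3, 6, 9]
```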

Advanced Programming Interview Questions

Finally, let’s see some of the most common questions for experienced candidates targeting top tech companies.

Dynamic programming interview questions

17. What is dynamic programming, and when is it used?

Dynamic programming is a method used to solve complex problems by dividing them into smaller overlapping subproblems.

Instead of starting from scratch each time, you keep track of the solutions to those smaller parts, which means you don’t have to do the same calculations repeatedly. This method is very useful for finding the longest common subsequence between two strings or finding the minimum cost to reach a specific point on a grid.

18. Explain the difference between memoization and tabulation

Memoization and tabulation are two powerful techniques in dynamic programming to optimize the performance of algorithms, often recursive algorithms.

Memoization, a form of caching, involves storing the results of expensive function calls and returning the stored results whenever the same inputs occur again. In this way, subproblems are only computed once. Memoization follows a top-down approach, meaning that we start from the "top problem" and recurse down to solve and cache multiple subproblems.

By contrast, tabulation involves computing the smallest subproblems first and storing the results in a table. It’s considered a bottom-up approach because it starts by solving the smallest subproblems, and once we have all of the solutions to these subproblems, we compute the solution to the top problem.

19. Solve the Fibonacci sequence using dynamic programming

The Fibonacci sequence goes as follows: 0, 1, 1, 2, 3, 5, 8, 13, 21, 34 … where each number in the sequence is found by adding up the two numbers before it.

The most intuitive way of solving the problem is through recursion, as shown below.

    # A simple recursive program for Fibonacci numbers
    def fib(n):
        if n <= 1:
            return n
        return fib(n - 1) + fib(n - 2)

However, this is not the most efficient way of finding the sequence. The previous algorithm has an exponential time complexity, noted as O(2^N), which means that the number of calculations increases exponentially as N increases.

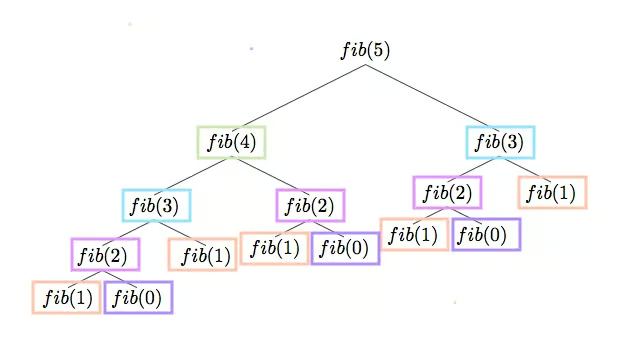

Another way of looking at the Fibonacci sequence problem is by splitting it into small subproblems, as follows:

Fibonacci sequence for fib(5).

As you can see, if we were to run our code for n=5, the fib() function calculates the same operation several times, resulting in a waste of computing resources.

Dynamic programming provides several techniques to optimize the calculation of the Fibonacci sequence.

Let’s analyze the problem with memoization. As already mentioned, it involves storing the result of expensive function calls and returning the stored results whenever the same inputs occur again. This is achieved with the following code, which stores the results of the function fibonacci_memo() in a dictionary. With this optimization, the result for each input is computed only once, reducing time complexity to linear, noted as O(N).

    cache = {0: 0, 1: 1}

    def fibonacci_memo(n):
        if n in cache:  # Base case, or a value that was already computed
            return cache[n]
        # Recursive case: compute and cache the Fibonacci number
        cache[n] = fibonacci_memo(n - 1) + fibonacci_memo(n - 2)
        return cache[n]

    [fibonacci_memo(n) for n in range(15)]
    # [0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55, 89, 144, 233, 377]
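The same sequence can also be computed bottom-up with tabulation. The following is a minimal sketch; the function name `fibonacci_tab` is an assumption made for this example. It fills a table from the smallest subproblems upward, so no recursion is needed.

```python
def fibonacci_tab(n):
    """Bottom-up Fibonacci: fill a table from the smallest subproblems up."""
    if n < 2:
        return n
    table = [0] * (n + 1)   # table[i] will hold the i-th Fibonacci number
    table[1] = 1
    for i in range(2, n + 1):
        table[i] = table[i - 1] + table[i - 2]
    return table[n]


print([fibonacci_tab(n) for n in range(15)])
# [0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55, 89, 144, 233, 377]
```

Like the memoized version, this runs in O(N) time. Because each step only needs the previous two values, the table could be replaced with two variables to bring the extra memory down to O(1).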

20. Explain the concept of optimal substructure and overlapping subproblems

In dynamic programming, subproblems are smaller versions of the original problem. A problem has overlapping subproblems if finding its solution involves solving the same subproblem multiple times, as happens when calculating the Fibonacci sequence above.

On the other hand, a problem is deemed to have an optimal substructure if the optimal solution to the given problem can be constructed from optimal solutions of its subproblems.
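Optimal substructure can be illustrated with the minimum-cost path on a grid. In this sketch, movement is assumed to be restricted to steps right or down, and `min_path_cost` is a hypothetical helper name. The cheapest way to reach a cell is the cell's own cost plus the cheaper of the optimal costs of the cell above and the cell to the left.

```python
def min_path_cost(grid):
    """Minimum cost from top-left to bottom-right, moving only right or down."""
    rows, cols = len(grid), len(grid[0])
    cost = [[0] * cols for _ in range(rows)]  # cost[r][c]: best cost to reach (r, c)
    for r in range(rows):
        for c in range(cols):
            if r == 0 and c == 0:
                best_previous = 0                      # starting cell
            elif r == 0:
                best_previous = cost[r][c - 1]         # can only come from the left
            elif c == 0:
                best_previous = cost[r - 1][c]         # can only come from above
            else:
                best_previous = min(cost[r - 1][c], cost[r][c - 1])
            cost[r][c] = grid[r][c] + best_previous
    return cost[-1][-1]


grid = [
    [1, 3, 1],
    [1, 5, 1],
    [4, 2, 1],
]
print(min_path_cost(grid))  # 7
```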

Programming technical interview questions

21. Explain how hash tables work

Hashmaps, also known as hash tables, represent one of the most common implementations of hashing. Hashmaps store key-value pairs (e.g., employee ID and employee name) in an array that is accessed by index. We could say that a hashmap is a data structure that leverages hashing techniques to store data in an associative fashion.

The idea behind hashmaps is to distribute the entries (key/value pairs) across an array of buckets. Given a key, a hashing function computes an index that indicates where the entry can be found. Different keys can occasionally map to the same index, a situation known as a collision, which implementations handle with techniques such as chaining. Because the index is computed directly from the key, hashmaps are particularly well-suited for multiple data operations, including data insertion, removal, and search.
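The bucket idea can be sketched in a few lines of Python. This is a simplified illustration that resolves collisions by chaining (each bucket is a small list of pairs); the `HashTable` class and its method names are assumptions made for this example, and Python's built-in `dict` is what you would use in practice.

```python
class HashTable:
    def __init__(self, num_buckets=8):
        # Each bucket is a list of (key, value) pairs.
        self.buckets = [[] for _ in range(num_buckets)]

    def _index(self, key):
        # The hash function maps a key to a bucket index.
        return hash(key) % len(self.buckets)

    def put(self, key, value):
        bucket = self.buckets[self._index(key)]
        for i, (existing_key, _) in enumerate(bucket):
            if existing_key == key:
                bucket[i] = (key, value)   # overwrite an existing entry
                return
        bucket.append((key, value))        # new entry (or a collision)

    def get(self, key, default=None):
        for existing_key, value in self.buckets[self._index(key)]:
            if existing_key == key:
                return value
        return default

    def remove(self, key):
        bucket = self.buckets[self._index(key)]
        for i, (existing_key, _) in enumerate(bucket):
            if existing_key == key:
                del bucket[i]
                return True
        return False


employees = HashTable()
employees.put(101, "Ada")
employees.put(102, "Grace")
print(employees.get(101))     # Ada
print(employees.remove(102))  # True
print(employees.get(102))     # None
```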

22. What is a deadlock in multithreading?

Threading allows different parts of a process to run concurrently. A process is simply a running program, and each thread is a separate unit of execution that belongs to that process and shares its memory.

Deadlock happens when multiple threads are indefinitely blocked, waiting for resources that other threads hold. This scenario leads to an unbreakable cycle of dependencies where no involved thread can make progress.
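A classic way to create such a cycle is for one thread to acquire lock A and then lock B while another acquires lock B and then lock A. A common remedy is to always acquire locks in the same order. The sketch below shows that remedy using Python's `threading` module; the `balance` dictionary and `transfer` function are hypothetical.

```python
import threading

lock_a = threading.Lock()
lock_b = threading.Lock()
balance = {"a": 100, "b": 100}


def transfer(source, destination, amount):
    # Both locks are always taken in the same order (lock_a, then lock_b),
    # whichever direction the money moves. If one thread took lock_a first
    # and another took lock_b first, each could end up waiting on the other.
    with lock_a:
        with lock_b:
            balance[source] -= amount
            balance[destination] += amount


threads = [
    threading.Thread(target=transfer, args=("a", "b", 10)),
    threading.Thread(target=transfer, args=("b", "a", 5)),
]
for thread in threads:
    thread.start()
for thread in threads:
    thread.join()

print(balance)  # {'a': 95, 'b': 105}
```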

23. What is the difference between breadth-first search (BFS) and depth-first search (DFS)?

Breadth-first search (BFS) and depth-first search (DFS) are both graph traversal algorithms designed to explore a graph or tree.

BFS explores a graph level by level, visiting all nodes at the current depth before moving to the next. By contrast, DFS prioritizes exploring one branch as deeply as possible before backtracking to investigate alternative branches.

BFS is particularly useful when the goal is to find the shortest path in an unweighted graph. However, BFS can use a lot of memory, especially in wide graphs, because it must keep track of all of the nodes at each level. BFS is an excellent choice for social network analysis or simple routing problems.

DFS, on the other hand, is useful when the goal is to explore all possible paths or solutions, such as puzzle-solving or finding cycles in a graph. Unlike BFS, DFS doesn’t guarantee the shortest path. However, it is more memory-efficient, as it only keeps track of the current path.
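The different visiting orders are easiest to see on a small example. The sketch below runs both traversals on a made-up graph stored as an adjacency dictionary; BFS uses a queue, while DFS relies on recursion (an implicit stack).

```python
from collections import deque

graph = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": ["D", "E"],
    "D": ["F"],
    "E": ["F"],
    "F": [],
}


def bfs_order(graph, start):
    """Visit nodes level by level using a FIFO queue."""
    seen = {start}
    order = []
    queue = deque([start])
    while queue:
        node = queue.popleft()
        order.append(node)
        for neighbor in graph[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return order


def dfs_order(graph, start, seen=None, order=None):
    """Follow one branch as deep as possible before backtracking."""
    if seen is None:
        seen, order = set(), []
    seen.add(start)
    order.append(start)
    for neighbor in graph[start]:
        if neighbor not in seen:
            dfs_order(graph, neighbor, seen, order)
    return order


print(bfs_order(graph, "A"))  # ['A', 'B', 'C', 'D', 'E', 'F']
print(dfs_order(graph, "A"))  # ['A', 'B', 'D', 'F', 'C', 'E']
```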

24. What is the time complexity of quicksort and mergesort?

Merge sort works by recursively dividing the input array into smaller subarrays and sorting those subarrays, then merging them back together to obtain the sorted output. It has a linearithmic time complexity, noted as O(N log(N)).

In Big O notation, linearithmic time grows only slightly faster than linear time: if the amount of input data is doubled, the time it takes for merge sort to process the data slightly more than doubles.

The quick sort algorithm uses a partition technique by choosing a value from the list called the pivot. All items smaller than the pivot will end at the left of the pivot, and greater elements at the right. Quick sort will be called recursively on the elements to the left and right of the pivot.

Quick sort has an average time complexity of O(N log(N)). In the worst-case scenario, which occurs when the pivot choice consistently results in unbalanced partitions, its time complexity is quadratic, noted as O(N^2).
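Both algorithms can be sketched compactly in Python. These versions favor readability: they return new lists rather than sorting in place, and the quick sort picks the middle element as the pivot, which is only one of several possible pivot strategies.

```python
def merge_sort(items):
    """Split the list in half, sort each half, then merge the sorted halves."""
    if len(items) <= 1:
        return list(items)
    mid = len(items) // 2
    left = merge_sort(items[:mid])
    right = merge_sort(items[mid:])

    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])    # at most one of these two still has elements
    merged.extend(right[j:])
    return merged


def quick_sort(items):
    """Partition around a pivot, then sort each side recursively."""
    if len(items) <= 1:
        return list(items)
    pivot = items[len(items) // 2]
    smaller = [x for x in items if x < pivot]
    equal = [x for x in items if x == pivot]
    larger = [x for x in items if x > pivot]
    return quick_sort(smaller) + equal + quick_sort(larger)


values = [5, 2, 9, 1, 5, 6]
print(merge_sort(values))  # [1, 2, 5, 5, 6, 9]
print(quick_sort(values))  # [1, 2, 5, 5, 6, 9]
```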

Conclusion

In this article, we've covered many programming interview questions spanning basic, intermediate, and advanced topics. From understanding the core concepts of data structures like arrays and linked lists to diving into more complex techniques from the disciplines of OOP, functional programming, and dynamic programming, we've explored the key areas that potential employers might inquire about.
